
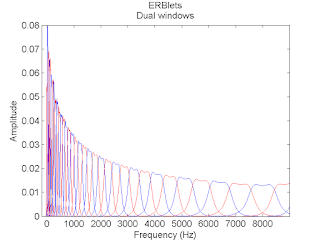

| ERBlet transform dual frame spectrum |

ERBlet

| In short: | A linear and invertible time-frequency transformation adapted to human auditory perception, for masking and perceptual sparsity |

| Etymology: | From the ERB (Equivalent Rectangular Bandwidth) scale |

Origin:

Thibaud Necciari, Design and implementation of the ERBlet transform, FLAME 12 (Frames and Linear Operators for Acoustical Modeling and Parameter Estimation), 2012

Time-frequency representations are widely used in audio applications involving sound analysis-synthesis. For such applications, obtaining a time-frequency transform that accounts for some aspects of human auditory perception is of high interest. To that end, we exploit the theory of non-stationary Gabor frames to obtain a perception-based, linear, and perfectly invertible time-frequency transform. Our goal is to design a non-stationary Gabor transform (NSGT) whose time-frequency resolution best matches the time-frequency analysis properties of the ear. The peripheral auditory system can be modeled, to a first approximation, as a bank of bandpass filters whose bandwidth increases with increasing center frequency. These so-called “auditory filters” are characterized by their equivalent rectangular bandwidths (ERB), which follow the ERB scale. Here, we use an NSGT with resolution evolving across frequency to mimic the ERB scale, hence the name “ERBlet transform”. Preliminary results will be presented. The subsequent discussion will focus on finding the “best” transform settings that achieve perfect reconstruction while minimizing redundancy.

Thibaud Necciari with P. Balazs, B. Laback, P. Soendergaard, R. Kronland-Martinet, S. Meunier, S. Savel, and S. Ystad, The ERBlet transform, auditory time-frequency masking and perceptual sparsity, 2nd SPLab Workshop, October 24–26, 2012, Brno

Time-frequency (TF) representations are widely used in audio applications involving sound analysis-synthesis. For such applications, obtaining an invertible TF transform that accounts for some aspects of human auditory perception is of high interest. To that end, we combine results from non-stationary signal processing and psychoacoustics.

First, we exploit the theory of non-stationary Gabor frames to obtain a linear and perfectly invertible non-stationary Gabor transform (NSGT) whose TF resolution best matches the TF analysis properties of the ear. The peripheral auditory system can be modeled, to a first approximation, as a bank of bandpass filters whose bandwidth increases with increasing center frequency. These so-called “auditory filters” are characterized by their equivalent rectangular bandwidths (ERB), which follow the ERB scale. Here, we use an NSGT with resolution evolving across frequency to mimic the ERB scale, hence the name “ERBlet transform”.

Second, we exploit recent psychoacoustical data on auditory TF masking to find an approximation of the ERBlet that keeps only the audible components (perceptual sparsity criterion). Our long-term goal is to obtain a perceptually relevant signal representation, i.e., as close as possible to “what we see is what we hear”. Auditory masking occurs when the detection of a sound (referred to as the “target” in psychoacoustics) is degraded by the presence of another sound (the “masker”). To accurately predict auditory masking in the TF plane, TF masking data are required for masker and target signals that are well localized in the TF plane. To our knowledge, such data are not available in the literature. Therefore, we conducted psychoacoustical experiments to measure the TF spread of masking produced by a Gaussian TF atom. The ERBlet transform and the psychoacoustical data on TF masking will be presented. The implementation of the perceptual sparsity criterion in the ERBlet will be discussed.

Contributors:

Thibaud Necciari with P. Balazs, B. Laback, P. Soendergaard, R. Kronland-Martinet, S. Meunier, S. Savel, and S. Ystad

Some properties:

Develops a non-stationary Gabor transform (NSGT) [Theory, Implementation and Application of Nonstationary Gabor Frames, P. Balazs et al., J. Comput. Appl. Math., 2011] with resolution evolving over frequency to mimic the ERB scale (Equivalent Rectangular Bandwidth, after B. C. J. Moore and B. R. Glasberg, "Suggested formulae for calculating auditory-filter bandwidths and excitation patterns", J. Acoustical Society of America 74:750-753, 1983). Linear and invertible time-frequency transform adapted to human auditory perception.
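To make the idea of "resolution evolving over frequency" concrete, here is a minimal Python sketch that places filter center frequencies uniformly on an ERB-number axis and assigns each one its equivalent rectangular bandwidth. It is only an illustration, not the authors' implementation: it assumes the linear ERB approximation and ERB-number formula of Glasberg and Moore (1990), a later fit than the 1983 formulae cited above, and the function names and the `bins_per_erb` parameter are hypothetical.

```python
import math


def erb_bandwidth(f_hz):
    """ERB (in Hz) of the auditory filter centered at f_hz.

    Assumption: linear fit ERB = 24.7 * (4.37 * f_kHz + 1).
    """
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


def hz_to_erb_number(f_hz):
    """Map a frequency in Hz to the ERB-number scale (assumed formula)."""
    return 21.4 * math.log10(1.0 + 0.00437 * f_hz)


def erb_number_to_hz(e):
    """Inverse of hz_to_erb_number."""
    return (10.0 ** (e / 21.4) - 1.0) / 0.00437


def erb_spaced_channels(f_min, f_max, bins_per_erb=1):
    """Return (center frequency, bandwidth) pairs spaced uniformly in ERB number.

    bins_per_erb is a hypothetical knob: more bins per ERB means more
    overlap between neighboring filters, hence more redundancy.
    """
    e_min = hz_to_erb_number(f_min)
    e_max = hz_to_erb_number(f_max)
    n = int(math.floor((e_max - e_min) * bins_per_erb)) + 1
    centers = [erb_number_to_hz(e_min + k / bins_per_erb) for k in range(n)]
    return [(fc, erb_bandwidth(fc)) for fc in centers]


if __name__ == "__main__":
    for fc, bw in erb_spaced_channels(50.0, 8000.0):
        print(f"center {fc:8.1f} Hz   bandwidth {bw:7.1f} Hz")
```

Running the script lists channels whose bandwidth grows with center frequency, which is the behavior the ERBlet windows are designed to mimic. Whether a given channel density yields an invertible transform depends on the frame conditions of the NSGT, which this sketch does not check.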

Anecdote: A Matlab implementation of the ERBlet transform should appear in 2013 for ICASSP in Vancouver.